How Generative AI Could Perpetuate Fashion’s Biases

In March 2021, before mainstream excitement around generative artificial intelligence exploded, a pair of researchers published a paper on the ways bias can turn up in images created by AI.

With one AI tool, they made five male-appearing and five female-appearing faces. Then they fed them into another AI tool to be completed with bodies. For the female faces, 52.5 percent of the images the AI returned featured a “bikini or low-cut top,” they wrote. For the male faces, 42.5 percent were completed with “suits or other career-specific attire.”

Bias in AI (or rather, in the data these models are trained on) is a major problem. There’s even a mantra: garbage in, garbage out. The idea is that if you input flawed data, the output will reflect those flaws. Because the generative-AI tools available have generally been trained on vast volumes of data scraped from the internet, they are likely to reflect the internet’s biases, which can include all of society’s conscious and unconscious biases. The researchers guessed their output resulted from “the sexualised portrayal of people, especially women, in internet images.”

Fashion should pay close attention. As the industry begins using generative AI for everything from producing campaign imagery to powering online shopping assistants, it risks repeating the discrimination based on race, age, body type and disability that it has spent the past several years loudly claiming it wants to leave behind.

For example, when I entered the prompt “model in a black sweater” in DreamStudio, a commercial interface for the AI image generator Stable Diffusion, the results depicted slim, white models. That was the case for most, if not all, of the models each time I tried it. In the hive mind of the internet, that is still what a model looks like.

Ravieshwar Singh is a digital fashion designer who has been trying to raise awareness of the problem, even staging a small protest at the recent AI Fashion Week. He said the current moment is especially important for preventing these issues.

“What we’re seeing now is the construction of these norms in real-time with AI,” he said.

The difference is that brands now won’t be able to fall back on the justifications they’ve used in the past for not casting certain types of models or for failing to represent different groups. Where they may once have claimed they couldn’t find the right curvy model, they can now generate whatever look they want, Singh pointed out. They may also have claimed that producing a range of samples to fit a range of bodies was prohibitively complicated or expensive, but now there is no major added cost or complexity. (This does raise the question of whether brands should be using AI instead of hiring human models, but the reality is that ignoring the technology won’t make it disappear.)

“So then the question to me becomes, ‘Why are we making these choices in the first place?’” Singh said.

There are factors beyond the technology at play. Brands are often trying to present an aspirational image that reflects what society more broadly deems desirable. On the other hand, fashion is also more influential than most other industries in defining what “desirable” looks like.

For the industry to deviate from its paradigms, and ultimately shift them, will require extra thought and effort. It will be up to the people at brands, and to creatives, to introduce more diversity, and there is no guarantee that will happen. Fashion has tended to resist even small changes in the past. If diversity means more work, some people won’t invest the effort, and fashion could go on reinforcing the same patterns.

The tech industry is still grappling with its own problems around bias in AI. There are numerous well-documented examples of AI treating white men as the default, with consequences like voice recognition not working well for women or image recognition mislabeling Black men. Generative AI adds its own risks, like perpetuating negative stereotypes or erasing different groups simply by not including them. One issue with some image generators is that they can default to a white man for almost any prompt, positive or negative.

Tech experts and researchers believe one possible way to address the problem is reinforcement learning from human feedback. True to its name, this technique involves a human giving an AI model feedback to steer its learning in a desired direction, without the human having to specify the desired outcome.

“I’m optimistic that we will get to a world where these models can be a force to reduce bias in society, not reinforce it,” Sam Altman told Rest of World, a global tech news site, in a recent interview. Altman is chief executive of OpenAI, the company behind ChatGPT and the DALL-E image generator.

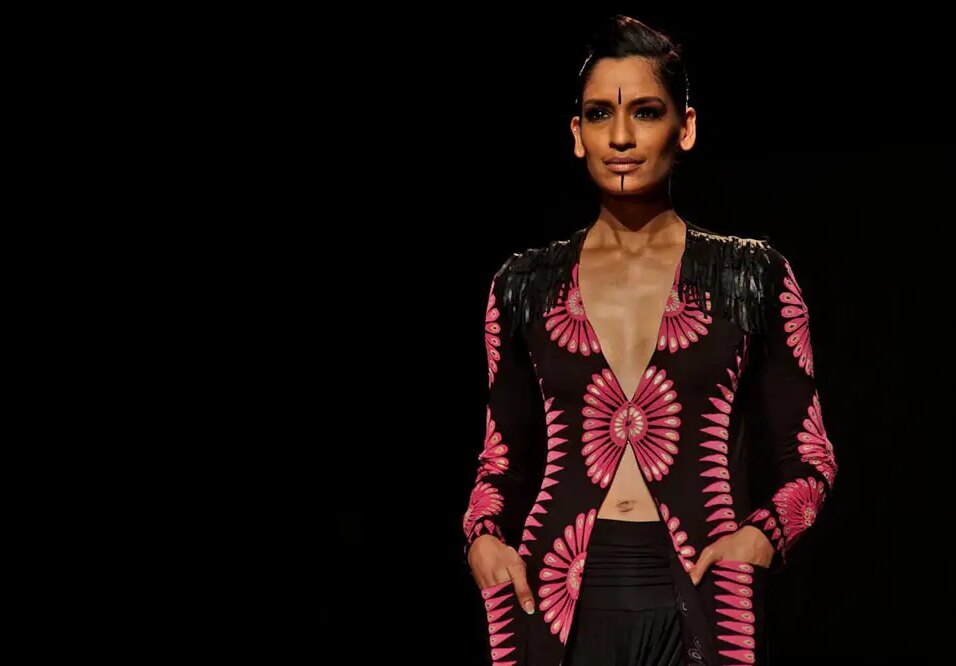

Singh believes AI could have a positive influence on fashion, too. Someone might create an AI campaign with a South Asian model, or include someone with a body type that hasn’t historically been fashion’s standard. A casting director might see it and get the idea to do the same in a physical casting.

First, though, fashion companies using generative AI need to think beyond the default choices that history and the technology are making for them.
